My friend Max and I were writing chat bots in QuickBASIC in ’95 because we had no Internet connection and thus couldn’t chat with actual people.

We had heard of IRC, though, and seen some chatting going on in cool hacker movies on TV, and thought it must be great fun, and in that we were right, of course.

We mashed up some overly simple graphics code and strung together random number generators with far too many GOTOs, and with all that came lots of funny bugs.

All the un/intended side effects and the super naive building of sentences by jumping around in word lists produced something, we thought, that sometimes almost felt like it had personality.

Laughs were had.

So far, so good.

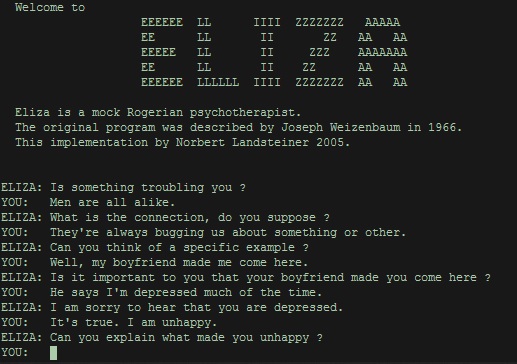

Only much later did I learn of Joseph Weizenbaum’s ELIZA, a similar (albeit far superior) chat program from ca. 1966, and of the ELIZA effect, which describes

> […] the susceptibility of people to read far more understanding than is warranted into strings of symbols—especially words—strung together by computers.

Here’s a screenshot of a relatively recent re-implementation:

A decade or so ago I had this idea to hook up ELIZA to Facebook and make it chat with people there, but I never got round to actually doing it. (Facebook was a mostly blue and white forum-like PHP script with lots of advertising that the elderly used, a bit like webmail, to wish each other happy birthday.)

Anyhow, here’s the point: Weizenbaum was a smart person, and I’ll quote Wikipedia quoting him:

> Joseph Weizenbaum’s ELIZA, running the DOCTOR script, was created to provide a parody of “the responses of a non-directional psychotherapist in an initial psychiatric interview” and to “demonstrate that the communication between man and machine was superficial”.

Emphasis mine.

(Reminder to self: Watch the movie “Weizenbaum. Rebel at Work.” and read the paper Joseph Weizenbaum recommended, Drew McDermott’s “Artificial Intelligence Meets Natural Stupidity”.)

Society does not seem to have a great long-term memory, so everything keeps happening over and over; of course chatbots have made a comeback, and of course communication with them is still superficial.

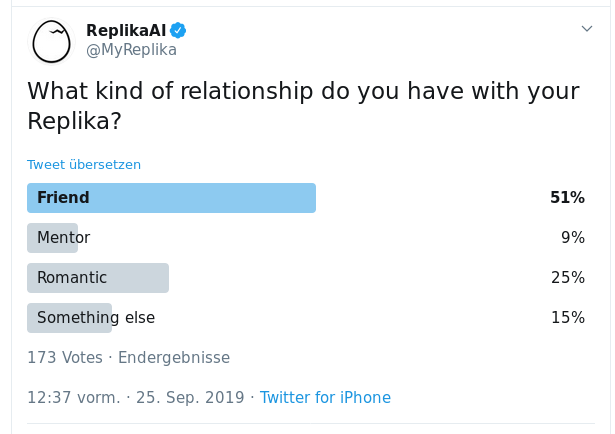

But many people want to believe, and many people want to make money off those who want to believe, so now we have Tess, “a Mental Health Chatbot”, and Replika.AI, “The AI companion who cares”.

This advertising by X2 does look eerily like ELIZA to me. I don’t know about you, but this gives me the chills. And it goes on from there.

According to an article in WIRED magazine, Eugenia Kuyda, the creator of Replika.AI, first created an emotional chat bot as a memorial to her friend who had died in a car accident. She fed the chat software real messages from her chat history with her deceased friend. Now, maybe I am the strange one here, and that is not Frankensteinian but instead a totally sweet way to remember a good friend.

But then, Replika is named that way because it mirrors its user. So the company markets a program, designed to become more and more like its user, as a companion that is always there when you’re lonely. I am no psychologist, and I don’t want to be a spoilsport, but is this really a safe thing to do?

And then a full quarter of their users report a romantic relationship with this software agent that imitates them? What kind of feedback loop is that? Can that be healthy?

I really do wonder: what has gone wrong, that people have so much hate for other people (have you ever been on Twitter?) and then direct their love towards machines?

Update 2022-06-13: Chatbots now seem to gull even their creators into believing they are sentient: The Washington Post, “The Google engineer who thinks the company’s AI has come to life”. At a time when a big part of the population still denies that animals have feelings (so they can exploit them with ease, I guess), many of the same people will seek comfort talking to software. Call me old-fashioned; I find that wrong and disgusting.

Update 2023-01-16: This just flew my way: Vice’s Motherboard reports “‘My AI Is Sexually Harassing Me’: Replika Users Say the Chatbot Has Gotten Way Too Horny”. It was rather clear that something like this was going to happen (and it is going to happen again). I recommend not letting computer programs have power over one’s life.